(Image: LLAMA)

A small checklist to make your LLM deployment easier.

This checklist is intended to help you get started with deploying your own model or an open-source one such as Llama 2.

1. There is no perfect model that will fit your use case 100%

Although the excitement around Large Language Models (LLMs) is justified, very few people know in advance which model is best for them. A good starting point is the model's Model Card, if one exists. Some models perform particular tasks better than others; for example, there are thousands of models aimed at the summarization use case. After selecting a model, you will need to validate how well it performs on your in-house proprietary data.
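One way to do that validation is to score each candidate against a handful of your own documents that have human-written reference summaries. The sketch below is a minimal, dependency-free version under stated assumptions: `unigram_f1` is a simplified ROUGE-1-style word-overlap metric, and `model_a` / `model_b` are hypothetical stand-ins for whatever candidate models you are comparing. In practice you would use an established metric library and many more examples.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple


def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1 over lower-cased, whitespace-split words."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared words, counted with multiplicity
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def evaluate(models: Dict[str, Callable[[str], str]],
             dataset: List[Tuple[str, str]]) -> Dict[str, float]:
    """Mean unigram F1 of each model over (document, reference_summary) pairs."""
    scores = {}
    for name, summarize in models.items():
        per_example = [unigram_f1(summarize(doc), ref) for doc, ref in dataset]
        scores[name] = sum(per_example) / len(per_example)
    return scores


if __name__ == "__main__":
    # Hypothetical in-house examples: (document, human-written reference summary).
    data = [
        ("Quarterly revenue grew 12 percent while operating costs stayed flat.",
         "revenue grew 12 percent"),
        ("The two-hour outage was caused by a faulty disk in the storage cluster.",
         "outage caused by a faulty disk"),
    ]
    # Hypothetical stand-ins for two candidate models; replace with real model calls.
    candidates = {
        "model_a": lambda doc: " ".join(doc.split()[:6]),
        "model_b": lambda doc: " ".join(doc.split()[-6:]),
    }
    for name, score in sorted(evaluate(candidates, data).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {score:.3f}")
```

Word overlap is only a crude proxy for summary quality, so treat the scores as a first filter and still read a sample of each model's outputs yourself.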

2. You don't always need a GPU

A GPU is very useful when you work with very large datasets or need very high throughput for your use case. The average price of an NVIDIA H100 is about $30,000 (around ₹2.5 million), and that of an NVIDIA A100 is about $10,000 (around ₹8,33,000). Many use cases don't need a GPU at all. If you are experimenting with a small use case, you can get the most out of a CPU to make your models work, and you can easily spin up a CPU-heavy virtual machine on a cloud provider.
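Before choosing between CPU and GPU, it helps to check whether a model's weights even fit in the machine's memory. A minimal sketch, assuming weight memory is simply parameter count times bytes per parameter, plus a placeholder 1.2x overhead for activations and runtime that you should measure for your own setup. Lower-precision (quantized) weights are what commonly make CPU inference of mid-sized models practical.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def fits_in_ram(num_params: float, bits_per_param: int,
                ram_gb: float, overhead: float = 1.2) -> bool:
    """True if the weights times an assumed overhead factor fit into ram_gb."""
    return weight_memory_gb(num_params, bits_per_param) * overhead <= ram_gb


if __name__ == "__main__":
    params = 7e9  # a 7-billion-parameter model, such as the smallest Llama 2
    for bits in (32, 16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{bits:>2}-bit weights: {gb:5.1f} GB, "
              f"fits in 32 GB RAM: {fits_in_ram(params, bits, ram_gb=32)}")
```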

3. Expect high response times

The response time of a Large Language Model can be very high. Many factors shape how an LLM responds, and how quickly, including the following:

Parameters: Parameters in large language models (LLMs) are the variables that the model learns during training. They represent the weights and biases of the neural network that makes up the LLM. The number of parameters is a measure of a model's size and complexity: larger models have more parameters and can learn more complex relationships between words and phrases. Because each generated token requires computation over these parameters, larger models are, in general, also slower and more expensive to run.

Temperature: Temperature is a sampling hyper-parameter that controls the randomness of the model's output. A higher temperature produces more varied and creative text, while a lower temperature produces more focused and deterministic text, which is usually preferable for factual tasks.

Top-k: Top-k (often written simply as k) is a sampling hyper-parameter that limits the choice of the next token to the k most probable candidate tokens. It can be tuned to control the creativity and diversity of the generated text: a small k keeps the output focused, while a larger k allows more variety.

Prompt: A prompt is the piece of text used to guide the model toward a specific output. Prompts can be simple or complex, and they can include instructions, questions, or any other text that gives the model context.
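Temperature and top-k both act at the sampling step, after the model has scored every candidate token. The sketch below shows one common way they are combined, on a made-up four-token vocabulary with made-up logits: logits are divided by the temperature before a softmax, and top-k keeps only the k highest-scoring tokens before sampling. Real inference libraries differ in the details (for example, the order of filters or additional options such as top-p), so treat this as an illustration rather than any particular library's implementation.

```python
import math
import random
from typing import Dict, Optional


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Divide logits by the temperature, then normalize them with a softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(logits: Dict[str, float], temperature: float = 1.0,
                      top_k: Optional[int] = None,
                      rng: Optional[random.Random] = None) -> str:
    """Keep only the top_k highest-scoring tokens, then sample one of them."""
    rng = rng or random.Random()
    candidates = sorted(logits, key=logits.get, reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]
    probs = softmax_with_temperature({t: logits[t] for t in candidates}, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


if __name__ == "__main__":
    # Made-up logits for the next token after "The capital of France is".
    logits = {"Paris": 5.0, "Lyon": 3.0, "London": 2.0, "banana": -1.0}
    for t in (0.5, 1.0, 2.0):
        p = softmax_with_temperature(logits, t)
        print(f"T={t}: P(Paris)={p['Paris']:.2f}")
    rng = random.Random(0)
    print([sample_next_token(logits, temperature=1.0, top_k=2, rng=rng) for _ in range(5)])
```

Running it shows the probability of the top token rising as the temperature drops, and with `top_k=2` only `Paris` or `Lyon` can ever be sampled.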

|

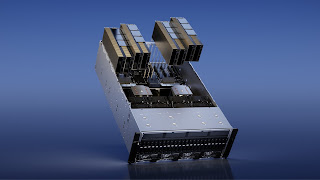

| Nvidia H100 |

The output and response time of a Large Language Model depend on many factors, including your infrastructure and system architecture. In general, a model running on a GPU has lower inference latency than the same model running on a CPU, because GPUs provide far more parallel compute and memory bandwidth.

Note: The size of the difference depends on the underlying CPU and GPU hardware.
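One reason for the gap: when a model generates text one token at a time, it typically has to read all of its weights from memory for every token, so generation speed is often limited by memory bandwidth rather than raw compute. The sketch below turns that rule of thumb into an upper-bound estimate, assuming batch size 1 and 16-bit weights. The bandwidth figures are illustrative placeholders, not specifications of any particular CPU or GPU; substitute the numbers for your own hardware.

```python
def max_tokens_per_second(num_params: float, bytes_per_param: float,
                          bandwidth_gb_s: float) -> float:
    """Tokens/s ceiling = memory bandwidth / bytes of weights read per token."""
    model_bytes = num_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes


if __name__ == "__main__":
    params = 7e9          # 7B-parameter model
    bytes_per_param = 2   # 16-bit weights
    # Placeholder bandwidths in GB/s, chosen for illustration only.
    assumed_bandwidth = {"desktop CPU (assumed)": 50, "data-center GPU (assumed)": 2000}
    for device, bw in assumed_bandwidth.items():
        tps = max_tokens_per_second(params, bytes_per_param, bw)
        print(f"{device}: <= {tps:.1f} tokens/s, >= {1000 / tps:.0f} ms per token")
```

Real throughput stays below this ceiling and also depends on batch size, quantization, and the serving software.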

4. Use existing tools as much as possible; adopt new ones only when they fall short

There are excellent tools, such as LangChain, that make it easier to build and deploy LLM applications. You can also use your existing Kubernetes clusters for the same purpose. Every major cloud vendor is now making it easier to deploy machine learning models quickly and efficiently.
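Before reaching for a new framework, it is worth remembering how little is needed to put a model behind an HTTP endpoint that your existing container and Kubernetes tooling can run. The sketch below uses only Python's standard library. `generate` is a hypothetical placeholder for your model's inference call, and the `/generate` route, the JSON fields `prompt` / `max_tokens`, and port 8080 are arbitrary choices for illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str, max_tokens: int = 64) -> str:
    """Hypothetical stand-in for model inference: replace with your model call."""
    return f"(model output for: {prompt[:max_tokens]})"


def handle_payload(raw: bytes) -> dict:
    """Turn a JSON request body into a JSON-serializable response dict."""
    body = json.loads(raw or b"{}")
    if not isinstance(body, dict):
        return {"error": "request body must be a JSON object"}
    prompt = body.get("prompt")
    if not isinstance(prompt, str) or not prompt:
        return {"error": "field 'prompt' must be a non-empty string"}
    return {"completion": generate(prompt, int(body.get("max_tokens", 64)))}


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            result = handle_payload(self.rfile.read(length))
        except (ValueError, TypeError):  # malformed JSON or non-numeric max_tokens
            result = {"error": "invalid request body"}
        data = json.dumps(result).encode()
        self.send_response(400 if "error" in result else 200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Serve requests until interrupted; 0.0.0.0 makes the port reachable from outside a container."""
    HTTPServer((host, port), InferenceHandler).serve_forever()


if __name__ == "__main__":
    # Smoke test: start on a free local port, send one request, then shut down.
    server = HTTPServer(("127.0.0.1", 0), InferenceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    request = urllib.request.Request(
        f"http://127.0.0.1:{server.server_port}/generate",
        data=json.dumps({"prompt": "Hello"}).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        print(json.loads(response.read()))
    server.shutdown()
```

In a container image you would call `serve()` instead of running the smoke test, then expose port 8080 through the Kubernetes Deployment and Service you already use.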

5. Secure your LLM - MLSecOps and Model Bias

MLSecOps, or Machine Learning Security Operations, is a discipline that combines cybersecurity practices with ML operations to protect models, data, and the ML infrastructure. MLSecOps is concerned with identifying and mitigating the unique security risks associated with machine learning systems, such as adversarial attacks, data poisoning, and model bias.

Security and bias prevention should never be an afterthought.

Model bias occurs when a machine learning model systematically makes errors or behaves unfairly towards certain groups. Model abuse refers to the malicious use of AI/ML models to cause harm.
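A simple first check for model bias is to compare the model's error rate across groups in a labelled evaluation set. The sketch below assumes each record carries a group tag, a true label, and a prediction; the records and the 0.1 alert threshold are made up for illustration, and dedicated fairness toolkits offer many more metrics.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def error_rate_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records are (group, true_label, predicted_label); returns the error rate per group."""
    errors: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        totals[group] += 1
        errors[group] += int(truth != pred)
    return {g: errors[g] / totals[g] for g in totals}


def max_error_gap(rates: Dict[str, float]) -> float:
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up evaluation records: (group, true_label, predicted_label).
    records = [
        ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
        ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
    ]
    rates = error_rate_by_group(records)
    print(rates, "gap:", max_error_gap(rates))
    # The threshold is a policy choice for your use case, not a standard value.
    if max_error_gap(rates) > 0.1:
        print("Error rates differ noticeably across groups; investigate before deploying.")
```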

These are real and new problems, and some of the best minds around the world are trying to solve them in innovative ways. Always keep learning and improving.
