Ollama.ai

Ollama.ai is an excellent tool that helps you run Large Language Models (LLMs) such as Llama 2 locally on your computer.

In this article, I test whether Ollama can work with my consumer-grade GPU, an MSI GTX 1660 Super.

My Desktop configuration is as follows:

- Ubuntu 22.04.3 LTS Linux

- 24 GB DDR4 RAM

- Intel i3-8100 @3.60GHz with 4 Cores

- MSI GTX 1660 Super with 6 GB GDDR6 video memory

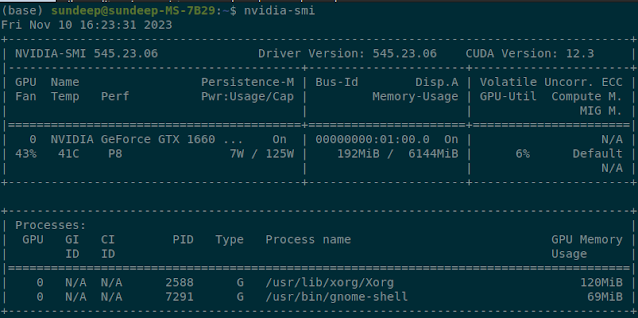

Before we start, make sure that you have the latest NVIDIA drivers and the NVIDIA CUDA toolkit installed: https://developer.nvidia.com/cuda-downloads. The installation was easy. Afterwards, validate that your GPU drivers are working by using the nvidia-smi command as below:

If you do not have a graphics card, Ollama can run in CPU-only mode too.

nvidia-smi command used to validate whether your GPU has the correct NVIDIA drivers

As you can see above, my NVIDIA graphics card has been detected and is ready to use.
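If you would rather run this check from a script, the same validation can be sketched in Python. This is a minimal sketch, assuming nvidia-smi is on your PATH; the helper names `parse_gpu_line` and `detect_gpus` are my own and not part of any NVIDIA or Ollama tooling, and the sample line in the docstring is only an illustration of the CSV output.

```python
import shutil
import subprocess


def parse_gpu_line(line):
    """Parse one CSV line such as 'NVIDIA GeForce GTX 1660 SUPER, 6144 MiB'."""
    # Split on the last comma so a comma inside the GPU name would not break parsing.
    name, _, memory = line.rpartition(",")
    return {"name": name.strip(), "memory_total": memory.strip()}


def detect_gpus():
    """Return the GPUs reported by nvidia-smi, or an empty list if it is unusable."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools are not installed or not on PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=10,
        )
    except (subprocess.SubprocessError, OSError):
        return []  # nvidia-smi exists but could not talk to the driver
    return [parse_gpu_line(row) for row in result.stdout.splitlines() if row.strip()]


if __name__ == "__main__":
    gpus = detect_gpus()
    print(gpus if gpus else "No NVIDIA GPU detected; Ollama can still run in CPU-only mode.")
```

An empty list here means either that the drivers are missing or that the machine has no NVIDIA card, so it is worth running nvidia-smi by hand to see which one applies.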

We are going to use nvitop to measure GPU performance. It is one of the best free tools for monitoring GPUs.

You can install Ollama from the official Ollama download page. As of November 2023, you can install Ollama only on a Mac or a Linux machine. Windows support will come soon.

You can now download the llama2 model by entering the following command in your terminal:

ollama pull llama2

Similarly, if you want the Mistral model, you need to enter:

ollama pull mistral

There is a comprehensive list of models that you can use on the Ollama website.

Ollama has a REST API to run and manage Machine Learning models. You can call the API as below:

curl http://localhost:11434/api/generate -d '{
"model": "llama2",
"prompt": "What is Life?"
}'

As you can see above, we are using the llama2 model.
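The same call can be made from Python using only the standard library. This is a rough sketch, assuming an Ollama server is listening on localhost:11434 and that, when "stream" is not set to false, the reply arrives as one JSON object per line, each carrying a "response" fragment. The function names are my own, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # same endpoint as the curl call


def build_request(model, prompt):
    """Build a POST request whose JSON body matches the curl example."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )


def collect_response(lines):
    """Join the "response" fragments of a streamed reply (one JSON object per line)."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines between objects
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object marks the end of the stream
    return "".join(parts)


def generate(model, prompt):
    """Send the prompt to a locally running Ollama server and return the full text."""
    with urllib.request.urlopen(build_request(model, prompt)) as reply:
        return collect_response(reply)


# Example (needs a running Ollama server with the model already pulled):
# print(generate("llama2", "What is Life?"))
```

Keeping the stream parsing in its own function makes it easy to try out on a few hand-written lines without a server running.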

Ollama can also work with other language models, such as the Mistral 7B model released by Mistral AI, by setting the model parameter to mistral.

Calling the Ollama API with the Mistral 7B model:

We will use the following request body:

{
"model": "mistral",
"prompt": "What is the capital of the United States of America?",
"stream": false
}

The response was:

{

"model": "mistral",

"created_at": "2023-11-21T07:06:57.825617661Z",

"response": "The capital city of the United States of America is Washington, D.C.",

"done": true,

"context": [

733,

16289,

28793,

28705,

1824,

349,

272,

5565,

302,

272,

2969,

3543,

302,

4352,

733,

28748,

16289,

28793,

13,

1014,

5565,

2990,

302,

272,

2969,

3543,

302,

4352,

349,

5924,

28725,

384,

28723,

28743,

28723

],

"total_duration": 3314902170,

"load_duration": 2814112001,

"prompt_eval_count": 20,

"prompt_eval_duration": 152161000,

"eval_count": 16,

"eval_duration": 335794000

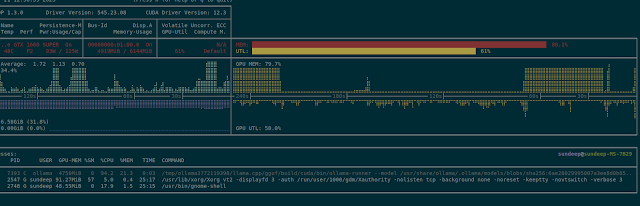

}During the test, I had kept nvitop running in another terminal:

nvitop command output to monitor the GPU

As you can see, GPU memory usage shot up to almost 5 GB. The model itself was around 4.1 GB, and it has to be loaded into memory before it can answer. This is why 7B-parameter models generally require at least 8 GB of RAM.
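The duration fields in the API response shown earlier give a rough idea of throughput on this GPU. The sketch below assumes that those durations are reported in nanoseconds, which is my reading of the Ollama API; the numbers are copied from the response and the function names are my own.

```python
NS_PER_SECOND = 1_000_000_000  # assumption: durations are in nanoseconds

# Values copied from the Mistral response earlier in this article.
stats = {
    "total_duration": 3314902170,
    "load_duration": 2814112001,
    "prompt_eval_count": 20,
    "prompt_eval_duration": 152161000,
    "eval_count": 16,
    "eval_duration": 335794000,
}


def tokens_per_second(count, duration_ns):
    """Convert a token count and a nanosecond duration into tokens per second."""
    return count / (duration_ns / NS_PER_SECOND)


def summarize(stats):
    """Turn the raw counters into seconds and tokens-per-second figures."""
    return {
        "total_s": stats["total_duration"] / NS_PER_SECOND,
        "load_s": stats["load_duration"] / NS_PER_SECOND,
        "prompt_tokens_per_s": tokens_per_second(
            stats["prompt_eval_count"], stats["prompt_eval_duration"]
        ),
        "generation_tokens_per_s": tokens_per_second(
            stats["eval_count"], stats["eval_duration"]
        ),
    }


if __name__ == "__main__":
    for key, value in summarize(stats).items():
        print(f"{key}: {value:.2f}")
```

Under that assumption, the request took about 3.3 seconds in total, of which about 2.8 seconds went into loading the model, and the answer itself was generated at roughly 47 to 48 tokens per second.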

You can similarly download other models and work with them in Ollama.ai. You can also send your prompts through an interactive chat interface, which you invoke by entering the following in the terminal:

ollama run mistral
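The chat interface keeps track of the conversation for you. With the REST API, a rough equivalent is to send the "context" array from one reply along with the next request. The sketch below only builds such a request body. It assumes the generate endpoint accepts a "context" field for this purpose, which you should check against the API documentation for your Ollama version. The function name and the follow-up prompt are hypothetical.

```python
import json


def follow_up_payload(model, prompt, previous_reply):
    """Build a request body that carries over the context of an earlier reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    context = previous_reply.get("context")
    if context:
        # Assumption: the server uses this array as short conversational memory.
        payload["context"] = context
    return payload


# A previous reply, with the context array shortened here for brevity.
previous = {"model": "mistral", "done": True, "context": [733, 16289, 28793]}
body = follow_up_payload("mistral", "Which state is it located in?", previous)
print(json.dumps(body, indent=2))
```

The resulting JSON can be posted to the same /api/generate endpoint used earlier in this article.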
